We are near the dawn of a new workload: BigData. Some people say that “it is always darkest just before the dawn”. I beg to differ: I think it is darkest just before it goes pitch black. Have a cup of wakeup coffee, get your eyes adjusted to the new light, and to flying blind a bit, because the next couple of years are going to be really interesting.

In this post, I will be sharing my views on where we have been and a bit about where we are heading in the enterprise architecture space. I will say in advance that my opinions on BigData are just crystallizing, and it is most likely that I will be adjusting them and changing my mind.

Yet, I think it will be useful to go back in history and try to learn from it. Bear with me, as I reflect on the past and walk down memory lane.

The Dawn of Computing: Mainframes

If you were to look at the single most successful computing platform of ancient times (that would be before 1990), you are stuck with the choice between the Apple II, the C64 or the IBM S/360 mainframe. The first two are consumer devices, and I may get back to those in another blog post. Today, let us look at the heavy lifting server platforms, since we are after all going to talk about data.

Under the guidance of Fred Brooks, IBM created one of the most durable, highly available and performing computing platforms in the history of mankind. Today, mainframes are challenged by other custom-built supercomputers, x86/x64 scale-up platforms and massive scale-out systems (ex: HADOOP). But even now, the good old S/360 still holds on to a very unique value proposition. No, it is not the fact that some of these machines almost never need to reboot. It is not the prophetic beauty of JCL (a job scheduler that “gets” parallelism) or the intricacies of COBOL or PL/I…

In fact, it is not the mainframe itself that gives it an edge, it is the idea of MIPS: Paying for compute cycles!

When you pay for compute cycles, every CPU instruction you use counts. The desire to write better code goes from being a question of aesthetics, to a question about making business sense (i.e. money). Good programmers, who can reduce MIPS count, can easily visualize their value proposition to business people, and justify extraordinary consulting fees.

As we shall see, it took the rest of us a long time to realize this.

The Early 90ies: Cataclysm

My programming career really took off in the late 80ies and early 90ies – before I got bitten by the database bug. I used to write C/C++, LISP, Perl, Assembler (various), ML and even a bit of Visual Basic (sorry!) back then. Pardon my nostalgia, but in those “old days” it was expected that you were fluent in more than one programming language.

There were some common themes back then.

First of all, we took pride in killing mainframes. We saw the old green/black terminals as an early, and failed, attempt at computing – dinosaurs that had to be killed off by the cataclysm of cheap, x86 compute power (or maybe RISC processors, though I never got around to using them). We embraced new programming languages and the IDE with open arms. We thought we succeeded: IBM entered a major crisis in the 80ies for the first time in their long and proud history. However, it could be argued that it was IBM as a company that failed, not the mainframe. There are still a lot of mainframes alive today; some of them have not been turned off since the 70ies and they run a large part of what we like to call civilisation. As a computer scientist, you have to tip your hat to that.

Another theme was a general sense of quick money. IBM had a lot of fat in their organization, and all that cost had to go somewhere: It ended up as MIPS charges to the customer. This made mainframes so expensive that it was easy to compete. It was the era of the “shallow port”. ERP systems running on ISAM style “databases” were ported 1-1 to relational databases on “decentral platforms” – aka: affordable machines. Back then, this was much cheaper than running on the mainframes and it required relatively little coding effort.

The result of shallow ports was code strewn with cursors. I suspect that a lot of our hatred towards cursors is from that time. People would do incredibly silly things with cursors, because that was the easy way to port mainframe code. Oracle supported this shallow port paradigm by improving the speed of cursors and introducing the notion of sequences and ROWID, which allowed even the database ignoramus to get decent, or should we say: “does not suck completely”, performance out of a shallow port. If you hook up a profiling tool to SAP or Axapta today, you can still see the leftovers from those times.

Late 90ies: All Abstractions are Leaky

Just as proper relational data models were beginning to take off, and we began realizing the benefits of cost based optimizers and set based operations, something happened that would throw us into a dark decade of computer science. As with all such things, it started well intentioned: A brilliant movement of programmers began to worry about encapsulation and maintainability of large code bases. Object oriented (OO) programming truly took off. The idea was older than that, but it now had critical mass.

The KoolAid everyone drank was that garbage collection, high level (OO) languages, IDE support and platform independence (P code/Byte Code) would massively boost productivity and that we never had to worry about low level code, storage and hardware again. To some extent, this was true: we could now train armies of cheap programmers and throw them at the problem (in that ironic way history plays tricks on us, the slang term was: The Mongolian Horde Technique). We also had less need to worry about ugly memory allocations and pointers – in the garbage collector we trusted. Everything was a class, or an object, and the compilers better damn well keep up with that idea (they didn’t). The champion of them all: JAVA, suffered the most under this delusion. C/C++ programmers became an endangered species. Visual Basic programmers became almost well respected. And, to please people who would give up pointers and malloc, but not curly brackets, a brilliant Dane invented C#.

At my university, Danes, proud of our heritage, even invented our own languages supporting this new world (Mjolnir Beta if anyone is still interested). Everyone was high on OO. A natural consequence of this was that relational databases had to keep up. If I could not squeeze a massive object graph into a relational database, that was a problem with the database, not with OO. Relational databases were not really taught at universities: they were bourgeois.

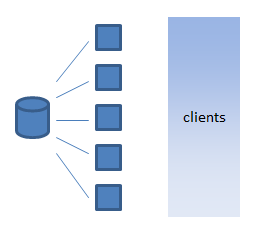

This was the dawn of object databases and the formalization of the 3-tier architecture. Central to this architecture was the notion that scale-out was mostly about two things:

- Scaling out the business logic (ex: COM+, Entity Beans)

- Scaling out the rendering logic (ex: HTML, Java Applets)

We still hadn’t figured out how to fully scale databases, though most database vendors were working on it, and there were expensive implementations that already had viable solutions (DB2 and Tandem for example). What we called “Scale-out” in the 3-tier stack was functional scale, not data scale: Divide up the app into separate parts and run those parts on different machines.

I suspect we scaled out business logic because we believed (I did!) that this was where the heavy lifting had to be done. There was also a sense that component reuse was a good thing, and that highly abstract implementations of business logic (libraries of classes) facilitated this. Of course, we did not see the dark side: that taking a dependency on a generic component tightly coupled you to the release cycles of that component. And thus was born “DLL-hell” and an undergrowth of JAVA protocols for distributed communication (ex: CORBA, JMS, RMI).

Moving business logic to components outside the database also created a demand for distributed transactions and frameworks for handling it (ex: DTC). People started arguing that code like this was the Right Way™ of doing things:

    Begin Distributed Transaction
      MyAccount = DataAccessLayer.ReadAccount()
      if withdrawAmount <= MyAccount.Balance then
        MyAccount.Balance = MyAccount.Balance - withdrawAmount
        MyTransaction = DataAccessLayer.CreateTran()
        MyTransaction.Debit = withdrawAmount
        MyTransaction.Target = MyAccount
        MyTransaction.Credit = withdrawAmount
        MyTransaction.Source = OtherAccount
        MyTransaction.Commit
      else
        Rollback Transaction
        Throw “You do not have enough money!”
      end
    Commit Distributed Transaction

You get the idea… Predictably, this led to chatty interfaces. Network administrators started worrying about latency, and people were buying dark fiber like there was no tomorrow. Database administrators were told to fix the problem and tune the database, which was mostly seen as a poorly performing file system. Looking back, it was a miracle that noSQL wasn’t invented back then.
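For contrast, here is a minimal sketch of the same withdrawal pushed down into the database as two set based statements inside one local transaction. It is an illustration only, not code from any system mentioned in this post: the `account` table, its columns and the `transfer` function are hypothetical names, and SQLite (via Python's standard `sqlite3` module) merely stands in for a real server.

```python
import sqlite3

def transfer(conn, source_id, target_id, amount):
    """Move `amount` between two rows of a hypothetical `account` table."""
    with conn:  # one local transaction: commit on success, rollback on error
        # The balance check and the debit happen in a single statement,
        # so no account object travels over the wire to be inspected.
        cur = conn.execute(
            "UPDATE account SET balance = balance - ? "
            "WHERE id = ? AND balance >= ?",
            (amount, source_id, amount),
        )
        if cur.rowcount != 1:
            raise ValueError("You do not have enough money!")
        conn.execute(
            "UPDATE account SET balance = balance + ? WHERE id = ?",
            (amount, target_id),
        )

# In-memory database with two made-up accounts.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)"
)
conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 0)])
conn.commit()

transfer(conn, 1, 2, 30)
balances = dict(conn.execute("SELECT id, balance FROM account"))
```

The point of the sketch is the shape, not the engine: the application sends two short statements instead of reading objects, mutating them in the middle tier and writing them back under a distributed transaction.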

Since this was the dawn of the Internet, scale out rendering made a lot of sense. It was all about moving fat HTML code over stateless protocols. It was not unreasonable to assume that you needed web farms to handle this rendering, so we naturally scaled out this part of the application. Much later, someone would invent AJAX and improve browsers to a point where this would become less of a concern.

We were high on compute power and coding OO like crazy. But like the Titanic and the final end of the Victorian technology bubble, we never saw what was about to hit us.

Y2K: Mediocre, But Ambitious

The new millennium had dawned (in 2000 or 2001, depending on how you count) and people generally survived. The mainframes didn’t break down, life went on. But a lot of programmers found themselves out of work.

In this light, it is interesting to consider that programmers were considered the most expensive part of running successful software back then. JAVA didn’t live up to its promise of cross platform delivery – it became: “Write once, debug everywhere” and people hated the plug-ins for the browser. While productivity gains from OO were clearly delivered, I personally think that IntelliSense was the most significant advance in productivity that happened between 1995 and 2005 (it takes away work from typing, so I can use it on thinking).

Professional managers, as the good glory hunters they are, quickly sniffed out the money to be made in computers during the tech bubble. As these things go, they invented a new term: “IT” that they could use to convince themselves that once you name something, you understand it. It was argued that we needed to “build computer systems like we build bridges”, but the actions we took looked more like “building computer systems like we butcher animals”. The capitalist conveyor belt metaphor, made so popular by Henry Ford and “enriched” by the Jack Welch-ish horror regime of the 10-80-10 curve, eventually led to the predictable result: Outsourcing.

Make no mistake, I am a great fan of outsourcing. I truly believe in the world-wide, utilitarian view of giving away our technology, knowledge, money and work to poor countries. It is a great way to equalize opportunities in the world and help nations grow out of poverty – fast. In fact, I think we need to start outsourcing more to Africa now. Outsourcing is the ultimate, global socialist principle.

The problem with outsourcing isn’t that we gave work away to unqualified people in Asia and Russia – because we didn’t – those people quickly became great, and some of the best minds in computer science were created there. Chances are that you are reading this blog on software written by Indians.

The problem with outsourcing was that it led to a large portion of westerners artificially inflating the demand for Feng Shui decorators, lifestyle coaches, Pilates instructors, postmodern “writers”, public servants and middle level management. These days, Europe is waking up to this problem.

But then again, if we send the jobs out of the country, all the unemployed have to do something with their time. Perhaps it is no coincidence that the computer game industry soon outgrew the movie industry. You can after all waste a lot more time playing Skyrim (amazing by the way) than watching the latest Mission Impossible, and you don’t have to support Tom Cruise’s delusions in the process.

Sorry, I ramble, somebody stop me. Yet, the fact is that a lot of companies wasted an enormous amount of money sending work outside the country, dealing with cultural differences and building very poor software. One of the results (again a big equalizer, this time of life expectancy) was that numerous people must have died from malpractice as the doctors were busy arguing about the latest way to AVOID building a centralized, and properly implemented, Electronic Patient Journal/Record system. As far as I know, that battle is still with us.

The Rise of the East: Nothing interesting to say about XML

Once you have latched on to the idea of a fully componentized code base, it makes sense to standardize on the protocols used for interoperability. Just when we thought we couldn’t waste any more compute cycles – someone came up with SOAP and XML.

This led to a new generation of architects arguing that we need Service Oriented Architectures (SOA). There are very few of those today (both the architects and the systems), but the idea still rings true for the customers who have adopted it. And it IS very true: a lot of things become easier if you can agree on XML as an interchange format. We also saw the rise of database agnostic data access layers (DAL). Everyone was really afraid to bind themselves to a monopoly provider.

I don’t think it is a coincidence that open standards and the rise of Asia/Eastern Europe/Russia coincided with *nix becoming very popular. The critical mass of programmers, as well as the interoperability offered by new and open standards, made it viable to build new businesses and software. The East has a cultural advantage of teaching mathematics in school at a level unknown to most westerners – I suspect it will be the end of our Western culture if we don’t adapt. Good riddance.

And the problem was again the over-interpretation of a new idea. Just because XML is a great interchange format does not mean it is a good idea to store data in that format. We saw the rise (and fall) of XML databases and a massive amount of programmer effort wasted on turning things that work perfectly well (SQL for example) into XML based, slower, versions of the same thing (XQuery for example). But something was true about this, and it lingered in our minds that SQL didn’t address everything, there WAS a gap: Unstructured data…

All the while, we were still high on compute power. Racking blade machines with SAN storage like there was no tomorrow. The term “server sprawl” was invented – but no one who wrote code cared. We continued to believe all that compute power was really cheap. Moore’s Law just kept on giving.

The End of the Free Lunch: Multi Core

But around 2005, it was the end of it. CPU clock speed stopped increasing. Throwing hardware at bad code was no longer an option. In fact, throwing hardware at bad code made it scale WORSE.

Those of us who had flirted with scale-out in the 90ies, and failed miserably, got flashbacks. Predictably, we acted like grumpy old men: angry and bitter that we didn’t get the blonde while she was still young and hot. Oracle became hugely successful with RAC, and the idea of good data modeling that actually has to run on hardware came back on the table, snatched from the hands of the OO fans while they were distracted by UML. People started arguing that perhaps, some of that business logic we all loved scaling out (functional scale-out, mind you), DID belong in the database after all. Thus came SQLCLR and JAVA support in Oracle and DB2. Opportunistic companies started publishing data models and selling them for good money: it was believed that if ONLY we could do 3NF modeling of data, all would be well. Inmon followers were high on data models!

People demanded automatic scale, and everyone in the know knew that this was not going to work without a fair amount of reengineering. But of course, we continued to pay homage to the idea – people don’t like their illusions broken.

While everyone was busy wrapping their head around scale-out, the infrastructure guys were beginning to show signs of panic. Big banks were complaining (and still are) that their server rooms were overflowing with machines. Their network switches were not keeping up anymore and the SAN guys went into denial: “You may measure a problem in Windows, but that is Windows who can’t do I/O, I see no problem in the SAN”. All our XML, OO and lazy code and CPU greed had started to take their toll on the environments. Somebody started talking about “Green Computing” – damn hippies!

Flashback: Kimball’s Revenge

While Teradata was busy selling “magic scale out” engines that had made great progress in this space, Kimball followers were spraying out Data Marts and delivering value in less time than it took the “EDW people” to do a vendor evaluation. The Kimball front got themselves a nice weapon of mass destruction with the Westmere and Nehalem CPU cores: cheap, powerful CPU power that took up very little space in the server room and didn’t need any special network components. It was all in-process on the same mainboard. Itanium (IA-64) went the way of the Alpha chip, the final realization that most code out there truly sucks: high clock speed beat elegant architectures.

Finally, a single machine with lots of compute power, no magic scale-out tricks, could solve a major subset of all warehouse problems (and interestingly most of the classic OLTP problems too). Vendors started digging out old 1970s storage formats that had a great fit with Kimball. Column stores and bitmap indexing got a revival. We saw the rise of Netezza, Red Brick, Analysis Services, TM1, DB2 OLAP Views and Essbase. This trend continues today, for example with Vertica and the Microsoft VertiPaq engines. There is even a great website dedicated to it: The BI Verdict.

Data kept growing though, and today we are seeing an interest in MPP, Kimball style, warehouses promising truly massive scale. Scale-out and scale-up combined in a beautiful harmony.

But of course, social media beat us all to it. No matter how much data we tried to cram into the warehouse, the world could spam it faster than we could consume it.

BigData: More Pictures of Cats than you can Consume

Our tour of history is nearly at an end – bear with me a little longer. Today, the old dream of handling unstructured data is becoming reality – but perhaps a nightmare for some.

With BigData and HADOOP, we have a new architecture that for the first time makes some promises we can start believing:

- Storage is TRULY cheap and massive (But you have to live with SATA DAS, not SAN)

- Unstructured data, and queries on it, can be run in decent time (Not optimally fast, but good enough)

- Semi automated scale is doable (but only if you know how to write Map/Reduce or use expression trees)
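To give a feel for the “you have to know how to write Map/Reduce” caveat, here is a minimal single-process sketch of the programming model. It is not HADOOP code and uses no HADOOP API; the function names and the sample records are made up for illustration. A real cluster would run the map and reduce phases on many machines and do the shuffle over the network.

```python
from collections import defaultdict
from itertools import chain

def map_phase(record):
    """Emit a (key, 1) pair for every word in one input record."""
    for word in record.lower().split():
        yield word, 1

def shuffle(pairs):
    """Group all emitted values by key (the framework's job on a cluster)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Collapse all values for one key into a single result."""
    return key, sum(values)

# Made-up input records standing in for a pile of unstructured text.
records = ["cat picture", "cat tweet", "retweet of cat picture"]

pairs = chain.from_iterable(map_phase(r) for r in records)
counts = dict(reduce_phase(k, vs) for k, vs in shuffle(pairs).items())
```

Scale is only “semi automated” because you still have to express your question as independent map and reduce steps; the framework can then spread those steps out for you.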

This means that we can now drink the barrage of data coming from the Internet. Of course, human nature being what it is, a lot of this data is pure noise. In fact, the signal to noise ratio is scary on the Internet – I can think of no better word for it.

Among all the noise of pictures of cats, tweets and re-tweets about toilet visits, and latest news about Britney Spears’ haircut, there is something we treasure: Knowledge about human nature. The problem that BigData helps us address is that we don’t always know how to find the needle in the haystack. If we build BigData installations, we can keep the data around, unafraid of losing it or burning all our money, while we dig for that insight we so desperately seek about our customers and the world around us.

I have written elsewhere about the great fit between BigData and Data Warehouses. But I would like to reinforce my point here: Once you know the structure of the data you are looking for, by all means model it and put it in a warehouse for fast consumption. If you don’t know what you are looking for yet (or if you can wait for it), put it in BigData.

Just be careful, there is a price associated with choosing temporary ignorance over knowledge seeking.

The Cloud: Grow or Pay!

Remember those infrastructure people who complained about server rooms overflowing? Do I hear your growls of anger? As architects/programmers/DBAs, I think we have often felt the frustration of dealing with infrastructure departments (in my case the SAN guys take the prize). “Why can’t those people just rack the damn server and be done with it – instead of letting me wait 3 months?” we ask ourselves as we again bang our fist into the table in frustration.

But the fact of the matter is that those server rooms ARE overflowing and overheating all over the world. Handling all the complicated logistics of racking and patching servers is expensive, and that is a direct consequence of our greed for compute power. Those infrastructure people are just trying to survive the onslaught of the server sprawl – give them a break. They respond by virtualizing (SAN and virtual machines) and they fight back when we ask for hardware that is non-standard or “not certified”.

Perhaps it is time we look ourselves in the mirror and ask if we really need to waste all those CPU cycles on overly complex architectures that protect us from ourselves and our inability to properly analyse what we plan to do with data. Every time we rack a new server, power consumption goes up in the server room and it gets just a little warmer in there. We are incurring a long term cost in power and cooling by writing poor code and designing overly flexible architectures. At the end of the day, a conditional branch in the code (an “if statement”), metadata and dynamically typed, interpreted code costs CPU, and if we want to be flexible, we often need a lot of that.

If there is one thing we have learned from mainframes, it is that code lives nearly forever. Especially when it is so bad that no one dares to touch it, or so complex that no one understands it.

If it makes your day, think of this as a green initiative: you are saving the planet by writing better code and saving power. But of course, such tree hugger mentality gets us nowhere in the modern business world, so how about this instead: with the dawn (overcast?) of the cloud, compute cycles will once again be billed directly to you: the coder and DBA!

MIPS are back in a new form, and it again makes good business sense to write efficient code. In fact, it always made good sense (even in the 90ies), but all those costs you were incurring from writing bad code or forcing data into storage formats that are unnatural (e.g. XML) have so far been hidden in your corporate balance sheet. With the cloud, that cost is about to uncloak itself, and like a Klingon battleship – this might leave you in trouble!
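The arithmetic behind “MIPS are back” is simple enough to sketch. Every number below is a made-up assumption for illustration (the price per CPU-hour, the request volume and the CPU time per request are not taken from any real cloud price list), and `monthly_compute_cost` is a hypothetical helper.

```python
def monthly_compute_cost(cpu_seconds_per_request, requests_per_month,
                         price_per_cpu_hour):
    """Cost of the CPU time burned by one workload over a month."""
    cpu_hours = cpu_seconds_per_request * requests_per_month / 3600
    return cpu_hours * price_per_cpu_hour

PRICE_PER_CPU_HOUR = 0.10        # hypothetical price, in any currency
REQUESTS_PER_MONTH = 36_000_000  # hypothetical workload

# Same business function, two hypothetical implementations:
# 50 ms of CPU per request versus 5 ms of CPU per request.
wasteful = monthly_compute_cost(0.050, REQUESTS_PER_MONTH, PRICE_PER_CPU_HOUR)
efficient = monthly_compute_cost(0.005, REQUESTS_PER_MONTH, PRICE_PER_CPU_HOUR)
savings = wasteful - efficient
```

Under these assumptions the wasteful version costs ten times as much as the efficient one, and that difference now shows up on an invoice instead of disappearing into the server room budget.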

Summary: What History Taught Us

If you are still with me here, thank you for putting up with my tantrums. It is time to wrap this up. What has history taught us about architectures and how we go about storing data?

I like to think of computing as evolutionary, not revolutionary. There are very few major breakthroughs in computer science (perhaps with the aforementioned exception of IntelliSense) that don’t arrive with a lot of advance warning, and grow up slowly from many failed attempts at implementations. For example, column stores are super hot today, but they were invented back in the 70ies.

Let us have a look at the “evolutionary tree of life” for data storage engines:

Above is of course a gross over-simplification, and you could argue about where the arrows should connect. But no matter where those arrows lead, it makes the point I want to make: That nearly all of these technologies are still around today and very much alive (perhaps with the exception of XML databases, but those were pretty silly to begin with). In fact, most large organisations have most of them around.

Are all these technologies just alive because they have not yet been replaced with the latest and greatest? Is noSQL, HADOOP or some other new product bound to replace them all over time?

I think the different technologies are there for a good reason. A reason very similar to why there are many species of animals in the world. Each technology is evolved to solve a certain problem, and to live well in a specific environment. When you target the right technology to a certain problem (for example column stores towards dimensional models) you solve that problem elegantly and with the least amount of compute cycles – preserving energy. Very soon, solving problems with a low number of compute cycles in elegant ways is going to really count.

While you might be able to solve most of the structured DW data problems with HADOOP, you eventually have to ask: Is a generic map/reduce paradigm really the way to go about that?

Humans have a strange way of wanting a single answer to the big and complex questions in life, and we waste significant time searching for it. Entire cults of management theories (and I use that term lightly) and religions are built around these over-simplifications. We seek simple answers to complex questions, and as IT architects, this can lead us down a path of believing in “killer architectures”.

What history has taught us is that such killer architectures do not exist, but that the optimal strategy (just like in nature) depends on which environment you find yourself in. Being an IT architect means rising above all these technologies, to see that big picture, and resisting the temptation to find a single solution/platform for all problems in the enterprise. The key is to understand the environment you live in, and design for it using a palette of technologies.

Staying in the metaphor, this leads me to another conclusion: Just like evolution made us store fat on our bodies in different places depending on the usage (thank goodness), you also have to consider the option of storing your data in more than one place. Having a bit of padding all over the body is a lot more charming (and healthy) than a beer belly.